A sprinkler watering the lawn, distributing water all around… Imagine it happening backwards, with droplets from everywhere hitting the bullseye.

Swap water with data and you’ll instantly appreciate the job of consolidating information coming in different shapes and sizes into a centralised data hub.

Extract, Transform, Load (ETL) tools provide many generic answers to the water-to-nozzle challenge; however, the water coming back will need a storage tank, and storage needs to be engineered for purpose.

Storage

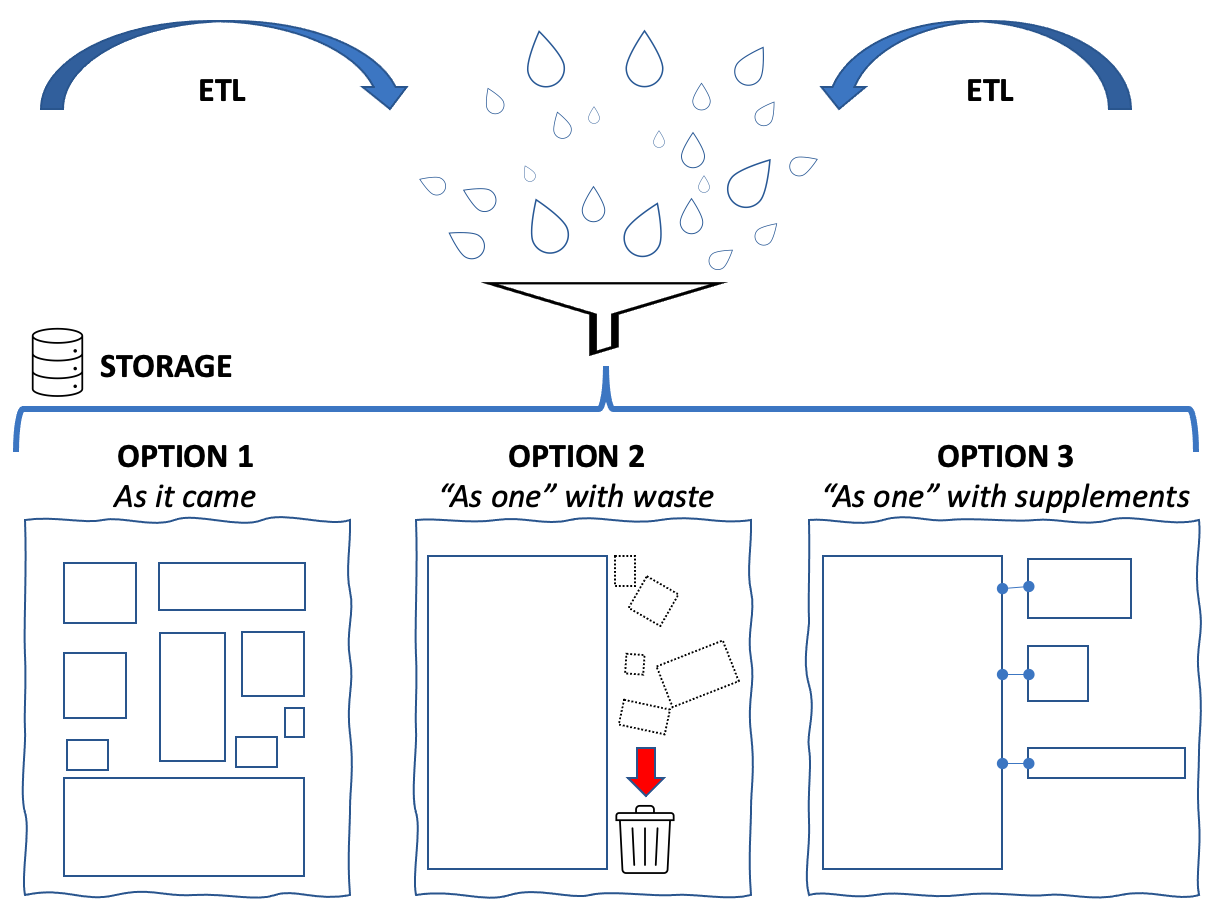

The picture above illustrates three main options to store the information collected into a central warehouse:

- As it came – each incoming set of data is neatly stored away “as is”, perhaps with a little validation / cleanup and tagging for easy recall;

- “As one” with waste – many data sources rationalised into a single one, where the data elements in common are mapped into a single table and the rest is disposed of. For example, a “store catalogue” where separate lists of “books” and “toys” are consolidated (common elements: “item type, item code, description, quantity in stock”; book-specific elements to ditch: “author, publisher, year”; toy-specific elements to ditch: “age-group, theme, dimensions”);

- “As one” with supplements – a more advanced version of the second option, where no data is lost, as the item-specific data elements are stored in item-type-specific tables.
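The three options above can be sketched in a few lines of Python. This is only an illustration built on the books-and-toys catalogue: the sample records, the `CORE_FIELDS` tuple and the three function names are assumptions made for the example, and plain lists and dictionaries stand in for real warehouse tables.

```python
# Hypothetical source records, using the fields named in the catalogue example.
books = [{"item_code": "B1", "description": "Atlas", "quantity": 3,
          "author": "A. Writer", "publisher": "Acme", "year": 2001}]
toys = [{"item_code": "T1", "description": "Robot", "quantity": 5,
         "age_group": "6+", "theme": "space", "dimensions": "10x5x5"}]
sources = {"book": books, "toy": toys}

# Elements shared by every source (the item type is added as a tag on load).
CORE_FIELDS = ("item_code", "description", "quantity")


def core_row(item_type, row):
    """Keep only the common elements and tag the row with its item type."""
    return {"item_type": item_type, **{f: row[f] for f in CORE_FIELDS}}


def store_as_it_came(sources):
    """Option 1: keep every source untouched, tagged by where it came from."""
    return {name: list(rows) for name, rows in sources.items()}


def store_as_one_with_waste(sources):
    """Option 2: one table of common elements; everything else is discarded."""
    return [core_row(name, row) for name, rows in sources.items() for row in rows]


def store_as_one_with_supplements(sources):
    """Option 3: one core table plus one supplement table per item type."""
    core, supplements = [], {}
    for name, rows in sources.items():
        for row in rows:
            core.append(core_row(name, row))
            extra = {k: v for k, v in row.items() if k not in CORE_FIELDS}
            extra["item_code"] = row["item_code"]  # key back to the core table
            supplements.setdefault(name, []).append(extra)
    return core, supplements
```

Note the trade-off the sketch makes visible: the second option is the simplest to query but loses `author` or `theme` for good, while the third keeps everything at the cost of a join on `item_code`.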

Usage

Stored water requires further treatment to become drinkable. Likewise, centralised data requires further work to become useful.

Intended usage will be a factor in deciding between different storage options. Is the purpose static (safekeeping), reference (for analytics), enrichment (centralised housekeeping of augmented data), or recycling (outputs from multiple systems, rationalised and centralised, becoming the inputs for another system – see the use case below)?

When the volume, variety and rate of change of the data sources being consolidated are small, an optimal solution is within easy reach, because experimenting with and switching between different options requires little effort.

However, the consolidation and use of information from high-volume, diverse, constantly evolving data sources require special attention and special tools.

The LiveDataset Perspective

LiveDataset and “special” go hand in hand. Its rich architecture aims to strike a balance between generic and specific to deliver maximum flexibility for an end-to-end solution.

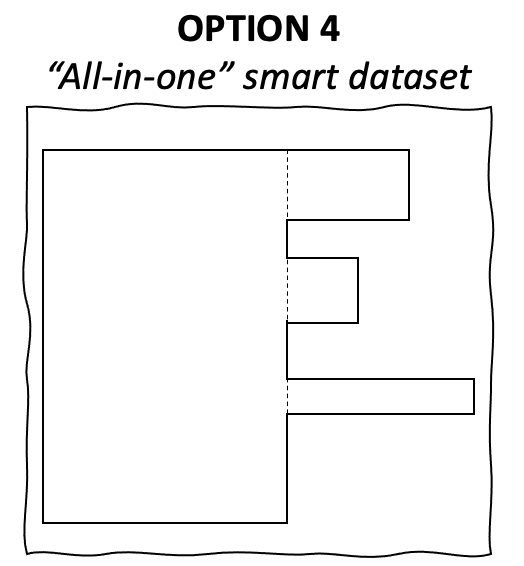

When it comes to storage, the smart use of our document-based database delivers a fourth option:

LiveDataset supports “multi-content” tables, where some elements are “core” (e.g. the store catalogue fields) and others are “item-specific” (e.g. book-specific or toy-specific). All data sits in one place, accessible through views designed, according to purpose, to work with either core or item-specific information.
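To make the idea concrete, here is a minimal sketch of a “multi-content” table, assuming each record is a document that carries the core fields plus whatever item-specific fields apply to it. It uses plain Python dictionaries and is not LiveDataset's actual API; `catalogue`, `core_view` and `item_view` are hypothetical names chosen for the illustration.

```python
# Fields every document in the table is assumed to share.
CORE_FIELDS = ("item_type", "item_code", "description", "quantity")

# One table, mixed content: each document holds core and item-specific fields.
catalogue = [
    {"item_type": "book", "item_code": "B1", "description": "Atlas", "quantity": 3,
     "author": "A. Writer", "publisher": "Acme", "year": 2001},
    {"item_type": "toy", "item_code": "T1", "description": "Robot", "quantity": 5,
     "age_group": "6+", "theme": "space", "dimensions": "10x5x5"},
]


def core_view(documents):
    """View for catalogue-level work: only the fields common to all items."""
    return [{field: doc[field] for field in CORE_FIELDS} for doc in documents]


def item_view(documents, item_type):
    """View for item-level work: full documents of a single item type."""
    return [dict(doc) for doc in documents if doc["item_type"] == item_type]
```

Calling `core_view(catalogue)` gives a uniform stock list, while `item_view(catalogue, "book")` returns the same records with `author`, `publisher` and `year` intact, without a second table or a join.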

ETL, storage, usage: it’s easy to picture them as three separate engineering challenges. In LiveDataset, these are three parts of the same journey.

The KYC/AML Compliance use case

A good end-to-end example is KYC/AML Compliance Case Management. Specifically, the consolidation, sampling and re-distribution – for Quality Control (QC) and Quality Assurance (QA) – of compliance exceptions, a rolling three-step process:

- ETL & unified storage: regularly (daily, weekly) collecting exceptions, reported in different formats by hundreds of business lines, into a centralised data warehouse;

- Analytics: sampling, without human bias, a subset of cases for QC/QA;

- Case Management: submitting QC/QA scripts (questionnaires) to hundreds of testers to provide feedback for all sampled cases.
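The sampling and distribution steps above can be sketched as follows. The source does not say which sampling method is used, so this example assumes simple random sampling with a recorded seed (one common way to keep human judgement out of the selection and make it reproducible for audit) and a round-robin split across testers. The function names, the sampling rate and the case identifiers are all hypothetical.

```python
import random


def sample_cases(case_ids, rate, seed):
    """Pick a random subset of cases for QC/QA.

    A seeded generator makes the draw repeatable, so the same inputs
    always yield the same sample.
    """
    ordered = sorted(case_ids)  # fixed order, independent of arrival order
    if not ordered:
        return []
    size = max(1, round(len(ordered) * rate))
    return sorted(random.Random(seed).sample(ordered, size))


def assign_to_testers(cases, testers):
    """Distribute sampled cases across testers in round-robin order."""
    return {case: testers[i % len(testers)] for i, case in enumerate(cases)}


# Example: sample 10% of 100 consolidated exceptions and hand them out.
case_ids = [f"EX-{i:03d}" for i in range(100)]
sampled = sample_cases(case_ids, rate=0.10, seed=2024)
assignments = assign_to_testers(sampled, ["tester_a", "tester_b", "tester_c"])
```

In practice the sampling would more likely be stratified, for instance by business line or risk rating, but the principle is the same: the selection rule is fixed in advance and applied by the machine, not by a person.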

This is a space where solutions often start with hundreds of spreadsheets flying over email and are subsequently rationalised with the implementation of three layers of tools to cover each of the steps: a) ETL to enable data consolidation; b) statistical libraries for sampling; c) Case Management for QC/QA.

Where variety and change are dominant features, this layered approach can soon become painful: with separate groups of people accountable for different components of the infrastructure, challenges end up being multiplied by three.

LiveDataset cuts through these challenges with an all-in-one solution made of inter-operating components of the same, adaptable platform. A platform that is managed by one support team with end-to-end accountability.

The main benefit? Time: faster delivery, faster adaptability, faster workflows.

–

This piece is part of our Digital Fire Drills series.